by | Sri Widias Tuti Asnam Rajo Intan | sriwidias@might.org.my

Artificial intelligence (AI) no longer exists only in the realm of science fiction. In real life, it is transforming the way humans interact with machines and the way machines assist in daily human activities. Today, AI is coming of age and is actively improving business processes and strategies. Many enterprising companies have started using AI through a combination of automated data science, machine learning, and modern deep learning approaches for tasks such as data preparation, predictive analytics, and process automation.

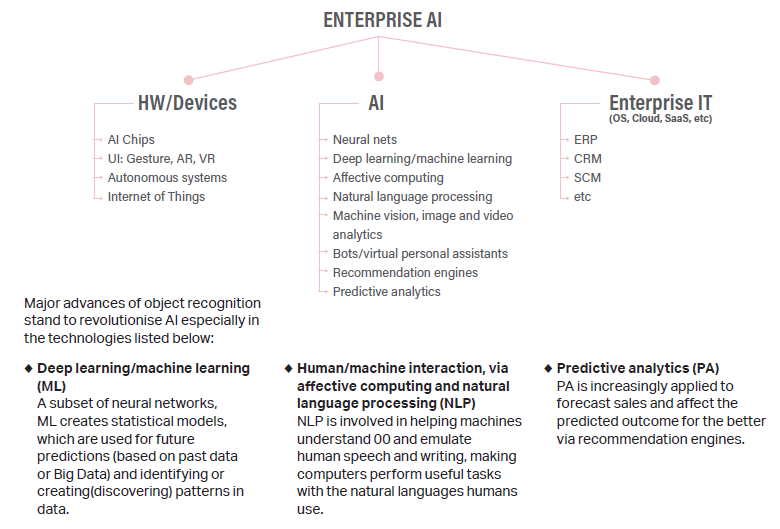

The convergence of new hardware and devices, emerging AI technologies, conventional enterprise IT and related business models like open source, cloud computing, and Software-as-a-Service (SaaS) is covered under the umbrella known as Enterprise AI, a landscape of enabling technologies, key companies and events. One of the AI technologies being rapidly applied is object recognition, which continues to evolve by combining artificial intelligence, computer vision and cloud-based networks to recognise objects and entities with greater accuracy. It is the area of AI concerned with the ability of robots and other AI implementations to recognise the various things around us.

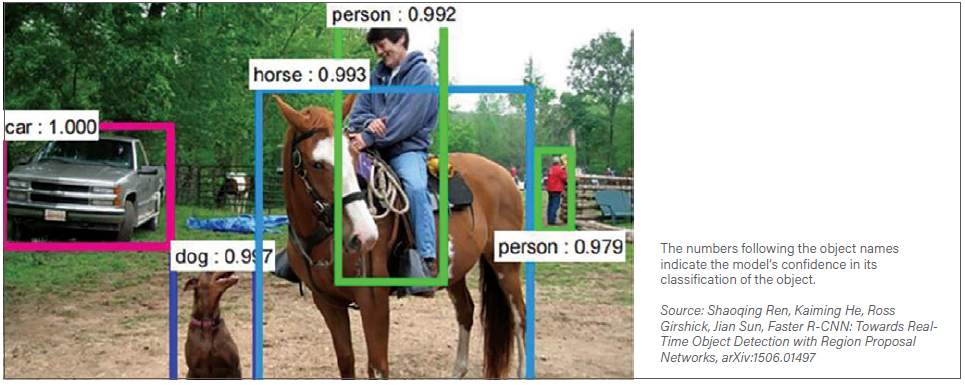

Object recognition allows robots and AI programmes to pick out and identify objects from inputs like video and still camera images. Methods used for object identification include 3D models, component identification, edge detection and analysis of appearances from different angles. Robots that understand their environments can perform more complex tasks efficiently.
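Of the methods listed above, edge detection is the easiest to show in a few lines. The sketch below is only an illustration, not how any particular product works: it applies a Sobel-style horizontal filter to a tiny made-up grayscale image, and the image, the threshold and the function names are all assumptions chosen for the example.

```python
# Minimal sketch of edge detection with a Sobel-style horizontal filter.
# The image, the threshold and all names here are illustrative assumptions.

SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]


def horizontal_gradient(image):
    """Return the absolute left-to-right brightness change at each interior pixel."""
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            total = 0
            for dr in range(3):
                for dc in range(3):
                    total += SOBEL_X[dr][dc] * image[r + dr - 1][c + dc - 1]
            out[r][c] = abs(total)
    return out


def edge_mask(image, threshold=2):
    """Mark a pixel as an edge (1) when its gradient reaches the threshold."""
    return [[1 if g >= threshold else 0 for g in row]
            for row in horizontal_gradient(image)]


# A 5x6 image: dark on the left (0), bright on the right (1).
IMAGE = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
MASK = edge_mask(IMAGE)

for row in MASK:
    print(row)
```

In the three interior rows, the mask is 1 only in the two columns that straddle the dark-to-bright boundary. Border pixels are left at 0 because the 3x3 filter does not fit there.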

THE BIRTH OF OBJECT RECOGNITION

Object recognition has recently become one of the most exciting fields in computer vision and AI. The technology determines what objects are and where they are in a digital image. However, its roots go back as far as 1957, when computer vision pioneer and psychologist Frank Rosenblatt created the Perceptron, a machine designed to simulate natural learning with an artificial neural network. By mimicking the learning patterns of the human brain, the machine could detect the edges of images and categorise them by shape into simple groups such as squares or triangles.
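The learning idea behind Rosenblatt's machine can be sketched in software. The example below is a simplified illustration rather than a reconstruction of the original hardware: it uses the classic perceptron update rule on a made-up data set in which each shape is described by two hypothetical features, the number of corners and the number of right angles.

```python
# Minimal sketch of the perceptron learning rule on toy data.
# The features (corner count, right-angle count) and the samples are
# illustrative assumptions, not data from Rosenblatt's experiments.


def predict(weights, bias, features):
    """Return 1 (square) if the weighted sum is positive, else 0 (triangle)."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, max_epochs=100):
    """Nudge weights towards each misclassified sample until none remain."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(max_epochs):
        mistakes = 0
        for features, label in samples:
            error = label - predict(weights, bias, features)
            if error != 0:
                mistakes += 1
                weights = [w + error * x for w, x in zip(weights, features)]
                bias += error
        if mistakes == 0:  # every sample classified correctly
            break
    return weights, bias


# (corners, right angles) -> 0 for triangle, 1 for square
SAMPLES = [
    ((3, 0), 0),  # ordinary triangle
    ((3, 1), 0),  # right-angled triangle
    ((4, 4), 1),  # square
]

WEIGHTS, BIAS = train_perceptron(SAMPLES)
print(WEIGHTS, BIAS)
print(predict(WEIGHTS, BIAS, (4, 4)))
```

On this toy data the loop settles by the third pass with weights of -2 and 3 and a bias of -1. In effect, the perceptron learns that right angles count towards "square" more strongly than corners alone.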

Although the basic tenets of object recognition have existed for decades, modern advances in computing technologies have greatly improved the speed, accuracy, and versatility of object recognition software.

Machines are now almost as good as humans at object recognition, and the turning point occurred in 2014 during an international competition for object recognition software. The winner yielded one of the first algorithms capable of matching the speed and accuracy of trained human annotators within a 1.7 percent discrepancy.

Over the next decade, such programmes will continue to improve and become more commonplace across a wide range of industries including agriculture, security, and manufacturing. Still, much of the long-term potential of object recognition lies not in static image or video recognition but in its role as an enabling technology that helps push the boundaries of emerging autonomous machines and platforms.

OBJECT RECOGNITION: WHERE IS IT GOING?

Next generation object recognition will enable autonomous systems to interact naturally with objects in an environment. Machines will not only be able to identify things, but also to understand their importance, purpose, and function. Improvements to object recognition algorithms will enable fully autonomous computer systems and machines.

In March 2017, Google unveiled its latest venture into object recognition with its Video Intelligence API. Most current generation object recognition software focuses on identifying items in still images. Google, however, took a step forward by identifying objects in video. This advancement will enable search engines (like Google’s) to retrieve results based on content, not just titles and descriptions.

Similarly, IBM’s flagship machine learning platform, Watson, recently expanded its deep learning algorithms to assist with Visual Recognition. IBM hopes to use this technology to allow developers to create custom item recognition programmes which can be used across a wide range of industries.

Technologies related to object recognition have already begun to give machines an unprecedented degree of autonomy. Using today’s technologies, sense and avoidance systems are currently being deployed in self-driving cars, autonomous drones, and next generation air collision avoidance systems.

By using real-time object recognition to inform their decisions, autonomous computer systems and machines will make sophisticated predictions and recommendations while interacting with their environment instinctively and in ways not currently possible.

DRIVERS OF EMERGING AI TECHNOLOGIES

Greater visibility across the network infrastructure has never been more vital. With the increased amount of data being created by devices and the network infrastructure itself, new ways of gathering information are required. The following drivers of emerging AI technologies are currently gaining a lot of traction in the industry:

♦♦Expanded cloud storage and wireless network speed. Cisco projects that by 2020, 95 percent of workloads will be processed by cloud data centers, with storage expanding five-fold and cloud storage accounting for 88 percent of total storage capacity. Average mobile and broadband download speeds are also projected to double globally by 2021.

Machines will have access to vast libraries of images and videos with which to cross reference unknown objects as storage and retrieval capabilities continue to expand. In turn, this will provide greater object recognition capabilities since they no longer need to rely on locally stored data only.

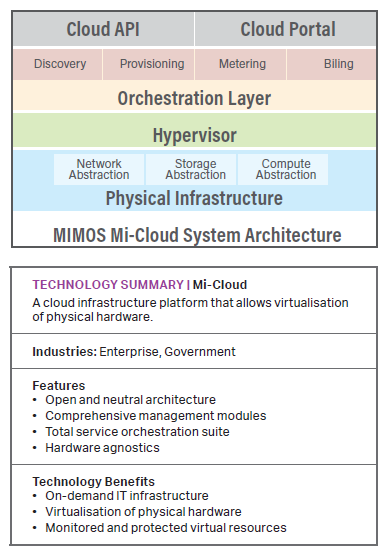

In Malaysia, MIMOS Berhad has embarked on a cloud infrastructure platform known as Mi-Cloud that is not dependent on any specific cloud hardware or software. The platform leverages existing operational expenditure and allows complimentary infrastructure to be offered to end-users over the Internet in a simple and flexible way with reduced cost.

Created to be used across industries, Mi-Cloud is built on open source software, thereby reducing the total cost of ownership via cost-effective licensing. Its open and neutral architecture allows easy integration with other systems, thus enabling customisation by service providers. It offers secured technology benefits to corporations and end users on a subscription-based, as-needed basis over the Internet.

By 2025, the annual disruptive impact of AI could amount to between USD14 trillion and USD33 trillion, including a USD9 trillion reduction in employment costs. One outcome of this disruption is the displacement of human jobs by automation. Estimates for jobs at risk of displacement vary by country: 47 percent of the workforce is estimated to be at risk in the US, 77 percent in China and 69 percent in India.

♦♦Growing demand for automation. According to a 2015 study from Oxford University and Deloitte, 35 percent of all jobs in the UK could be automated over the next 10 years. Furthermore, a report from Merrill Lynch found that 45 percent of manufacturing jobs are likely to be automated by 2025, up from only 10 percent today.

For machines to function in increasingly complex and sophisticated roles, they will need the tools necessary to interact with objects around them in real-time and under unexpected circumstances. A greater reliance on object recognition software will be required to allow machines not only to sense their environment but also to quickly identify previously un-encountered objects and items.

♦♦ Advances in machine learning (ML). While AI and ML often seem to be used interchangeably, they are two different things. AI is the broader concept of machines being able to carry out daily tasks in a smart way, and ML is a current application of AI designed to make machines more autonomous.

Two important breakthroughs led to the emergence of ML as the vehicle driving AI development forward. The first was the discovery that it is far more efficient to code machines to think like human beings. The second was the emergence of the internet, and with it the huge increase in the amount of digital information being generated, stored, and made available for analysis.

According to IBM, by 2020 the amount of digital data stored globally is expected to exceed 44 trillion gigabytes. Increasingly advanced machine learning algorithms are in demand to assist with data management.

EMERGING OBJECT RECOGNITION AI APPLICATIONS

Artificial intelligence (AI) technologies are booming and will present humankind with unprecedented opportunities arriving at unprecedented speed. Although huge advancements in AI and ML have already been seen, the future may well deliver even more through emerging AI applications such as those below:

♦♦Simultaneous Localization and Mapping (SLAM) technologies provide a cost-effective, software-based solution to object recognition that is uniquely tailored to the needs of mobile robots and vehicles. According to John Leonard, professor of mechanical and ocean engineering at MIT, “The goal is for a robot to build a map, and [to] use that map to navigate.” SLAM might not only help to lower the costs of object recognition; its reliance on common hardware coupled with downloadable software could also make it easier to apply to a wide range of existing machines.

Roomba, the domestic robot vacuum cleaner, uses SLAM technology to remember where it vacuumed last before heading to its dock to recharge. Researchers at MIT developed a method that uses a single monocular camera and object recognition software to operate at nearly the same level of precision as more expensive systems which use a range of purpose-built sensors and cameras.

♦♦The US military’s growing reliance on unmanned drones allows US forces to collect an unprecedented amount of surveillance data. However, the sheer amount of data quickly outstripped the capabilities of the teams of human analysts tasked with sorting through it. In 2017, the US military began using object recognition algorithms in its Algorithmic Warfare Cross-Functional Team to rapidly sort through video and images collected by unmanned drones to track ISIS troop movements and weapons shipments. By adding a mere 75 lines of code to existing programmes, US military officials have been able to use object recognition software to positively identify emplaced weapons and structures.

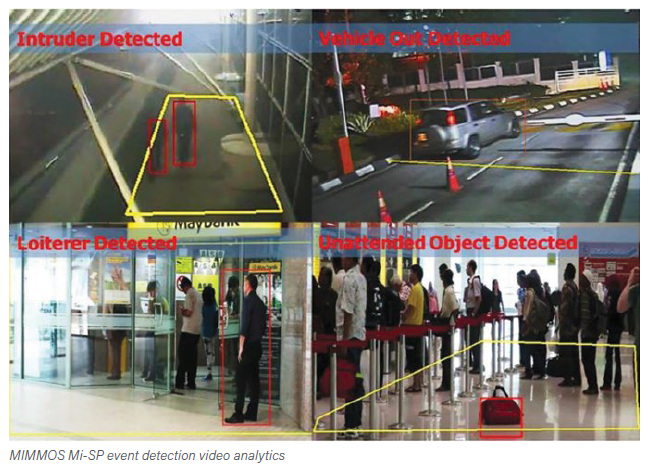

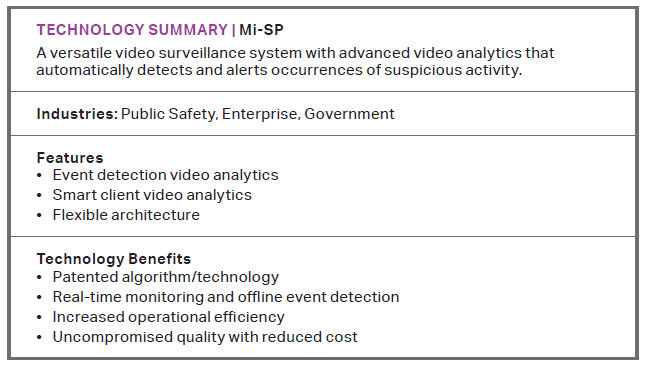

♦♦MIMOS Mi-SP is a versatile video surveillance system that includes intelligent elements of advanced video analytics. With Mi-SP, suspicious events can be detected by video analytics and an alert is generated to notify security personnel, thereby increasing the situational awareness of an entire organisation. Mi-SP is efficient, flexible, and can be integrated with existing video surveillance systems.

♦♦The future of the self-driving vehicle industry lies in object recognition technologies, which are key to the success of autonomous cars. Vehicles must not only differentiate between items such as traffic signs, pedestrians, and other vehicles but also provide the information necessary to make the real-time decisions needed to keep motorists safe. There is currently no industry standard approach to object recognition. Instead, several competing approaches are being explored concurrently. One approach relies on computer-learning techniques collectively known as deep learning, which use networks of computer processing nodes to mimic the human mind.

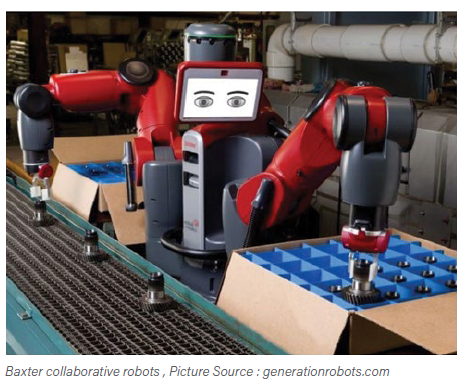

♦♦ Originally designed for manufacturing purposes, low cost humanoid Baxter robots are currently being used by the University of Maryland to demonstrate how computer learning paired with object recognition can train robotic assistants. By showing YouTube videos of simple tasks being performed, researchers at the University of Maryland have successfully taught Baxter machines a variety of tasks ranging from pouring water into a moving container to preparing basic meals. By relying on contextual object recognition, Baxter is able not only to identify an item in the video, but also to mimic its use. Although in its early stages, contextual machine learning could allow companies to train robots through traditional human training methods.

BUSINESS IMPLICATIONS OF AI

Artificial Intelligence (AI) already plays an important role in our economies. Robots and algorithms now do most of our stock trading, and numerous startups and the internet giants are racing to invest in the technology. Adoption by enterprises is increasing significantly. This does not come as a shock: surveys last year found that 38 percent of enterprises were already using AI, a figure expected to grow to 62 percent by 2018. It is estimated that the AI market will grow to more than $47 billion in 2020. Below are some of the business implications that are developing in parallel with the AI boom.

♦♦Robots and drones will be used to map homes, retail locations, and more to identify what is in them, as well as what is not (potentially creating a treasure trove of marketing data to help companies sell new products and services), the same way Google has driven cars around cities to map streets.

♦♦With the ability to recognise objects and situations, domestic robots (like Roomba) will be able to recognise lost items (such as jewellery or keys) and notify owners when such items are found. By recognising the sound of a glass breaking as it hits the floor (or even seeing a child run with a cookie), such robots would also be able to anticipate the need for clean-up. The global market for personal robots is predicted to reach $34.1 billion by 2022.

♦♦Object recognition technologies are advancing exponentially and will drive new creative AI abilities in fields such as fashion, furniture, and art. Organisations that rely on human creativity for design may find such technologies able to feed and inspire human creatives.

♦♦Enabled with real-time object recognition, autonomous robots may be used in hospitals and healthcare facilities to monitor (or even diagnose) patients in waiting rooms and intensive care units.

♦♦Corporations and governments will increasingly look to use object recognition software for security and surveillance networks. Advanced security systems will not only be able to identify faces, but also learn to recognise suspicious behaviour and allow for real-time passive monitoring and threat categorisation (such as identifying the clothes seen on a reported suspect).

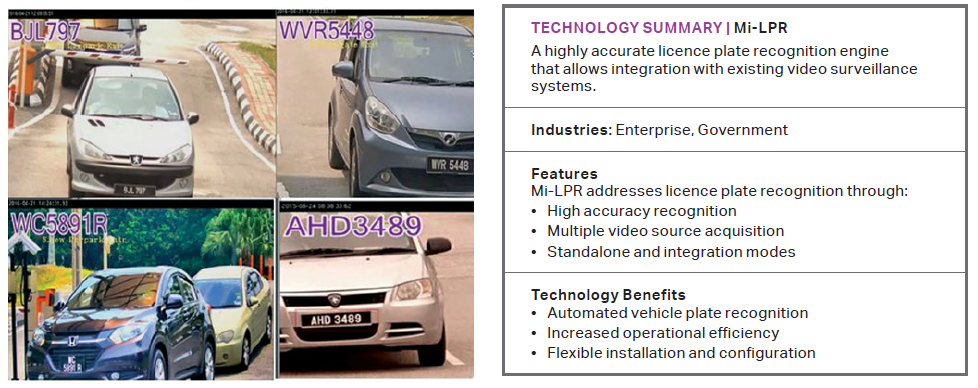

♦♦MIMOS Mi-LPR is a scalable automated licence plate recognition platform that processes and analyses video from vehicle surveillance systems. The platform can be integrated with existing vehicle management systems to provide real-time alerts as well as a forensics capability to retrace events. Audit trails can be generated from records of vehicle entries and exits. Mi-LPR also provides instant checks of vehicle registration numbers against watch lists. This enables authorities to intercept and stop vehicles, check them for evidence and, where necessary, make arrests.

♦♦Behaviour recognition will also be used for market research purposes, such as categorising consumer behaviours in retail environments to optimise the retail environment for consumer happiness.

♦♦As artificial intelligence and object recognition technologies continue to improve, autonomous machines will be used in search and rescue operations, especially where conditions are hazardous for human beings.

♦♦Despite the use of more than half a million tons of pesticide each year, insects and other pests continue to destroy 37 percent of potential food production in the United States. Passive monitoring can be used to identify pests and fungus in an agricultural setting while crops are growing, as well as during storage or transport. By specifically targeting the fields or rows that are at risk, companies can reduce the costs and environmental impacts associated with pesticide use. Further, computer learning can be leveraged to target specific species identified in a location without human interaction.

♦♦The ability of robots to recognise objects and interact with them will also drive adoption of more service robots in commercial and industrial workplaces. In retail, such robots will be used to audit inventory, restock shelves, and deliver items to customers.

RISKS AND CHALLENGES OF OBJECT RECOGNITION

There are certainly many business benefits to be gained from AI technologies today, but there are also obstacles to AI adoption that need further exploration. For example, the cameras and sensors involved face the same challenges as human eyes in identifying objects in real-time when visibility is reduced by poor lighting, dust, or rain, or when a scene is viewed from an unexpected angle. Infrared and night vision cameras can help to mitigate some of these limitations. However, there will be times when conditions are too poor for machines to operate autonomously.

Object recognition has improved accuracy rates in computer vision and even enabled machines to write surprisingly accurate captions for images, but machines still stumble in plenty of situations, especially when more context, backstory, or proportional relationships are required. Autonomous machines currently rely heavily on onboard hardware for processing, memory, and energy storage. Miniaturising these systems will require dramatic improvements to network speeds and cloud computing capabilities.

Computers struggle when only part of an object is in the picture (a scenario known as occlusion) and may have trouble telling the difference between a statue of a man on a horse and a real man on a horse. Computers also misidentify some images that seem simple to humans. A picture of yellow and black stripes, for example, may appear to a computer to be a school bus.

Furthermore, object recognition systems in AI are vulnerable to manipulation. Researchers have found that by altering specific pixels in images, they can intentionally change what the system sees. In the real world, this could mean altering a stop sign in a way that would be nearly imperceptible to a human, yet an autonomous vehicle might see it as something else or not see it at all.
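The pixel-altering attack described above can be illustrated with a deliberately tiny example. Real attacks target deep networks and use their gradients. The sketch below instead assumes a hypothetical four-pixel linear "stop sign" detector with made-up weights, where the direction that lowers the score fastest is simply the opposite of each weight's sign.

```python
# Minimal sketch of an adversarial perturbation against a toy linear classifier.
# WEIGHTS, BIAS, IMAGE and EPSILON are made-up values for illustration only.

WEIGHTS = [0.5, -0.25, 0.75, -0.5]
BIAS = -0.1
EPSILON = 0.1  # largest change allowed to any single pixel


def score(pixels):
    """Weighted sum of pixel brightness; positive means 'stop sign'."""
    return sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS


def classify(pixels):
    return "stop sign" if score(pixels) > 0 else "other"


def perturb(pixels, epsilon):
    """Shift every pixel by epsilon in the direction that lowers the score."""
    adversarial = []
    for w, p in zip(WEIGHTS, pixels):
        step = -epsilon if w > 0 else epsilon
        adversarial.append(min(1.0, max(0.0, p + step)))  # keep within [0, 1]
    return adversarial


IMAGE = [0.5, 0.5, 0.5, 0.5]
ADVERSARIAL = perturb(IMAGE, EPSILON)

print(classify(IMAGE))        # original image
print(classify(ADVERSARIAL))  # slightly altered image
```

Here no pixel moves by more than 0.1 on a 0-to-1 brightness scale, yet the label flips from "stop sign" to "other". The flip happens because the small changes all push the score in the same direction.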

What computers are better at is sorting through vast amounts of data and processing it quickly, which comes in handy when a radiologist needs to narrow down a list of x-rays with potential medical maladies or a marketer wants to find all the images relevant to a brand on social media. Computers may only be able to identify simple and basic images (such as a logo), but they are identifying them from a much larger pool of pictures and doing it faster than humans can (and without getting bored).

In the end, the promise of object recognition and computer vision at large is massive, especially when seen as part of the larger AI pie. Computers may not have common sense, but they do have direct access to real-time big data, sensors, GPS, cameras and the internet, to name just a few technologies.